Yoshikatsu Nakajima

Keio University, Japan

Title: Viewpoint class based camera pose estimation and object recognition

Abstract

Camera pose estimation with respect to a target scene is an important technology for superimposing virtual information in augmented reality. However, it is difficult to estimate the camera pose from every possible view angle, because feature descriptors such as SIFT are not fully invariant to viewpoint changes. We propose a novel, robust camera pose estimation method that uses multiple feature descriptor databases, one generated for each partitioned viewpoint (viewpoint class), within which the descriptor of each keypoint is nearly invariant. Our method estimates the viewpoint class of each input image with deep learning, trained on a set of images prepared for each viewpoint class. We introduce two ways of preparing these training images and generating the databases. In the first, images are synthesized with projection matrices while their backgrounds are varied, so that the network learns to be robust to the surrounding environment. In the second, real images are used so that the network learns the entire environment around the plane pattern. Evaluation confirmed that, compared with the conventional method, our method increases the number of correct matches and improves the accuracy of camera pose estimation.
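The abstract does not state how viewpoints are partitioned or how the projection matrices are built, so the following is only a minimal sketch under explicit assumptions. It assumes the plane pattern lies on the world plane Z = 0, bins viewing directions on the hemisphere above it by azimuth and tilt (the bin counts `N_AZ`, `N_TILT` and `MAX_TILT_DEG` are arbitrary), builds a look-at camera pose for a sampled viewpoint, and maps the pattern into the image with the standard planar homography H = K[r1 r2 t]. All function names and parameter values are hypothetical, not the authors' implementation.

```python
import math

# Hypothetical partition of the viewing hemisphere above a planar pattern
# (pattern lies on the world plane Z = 0, normal along +Z).
N_AZ = 8             # azimuth sectors (assumption)
N_TILT = 3           # tilt rings measured from the plane normal (assumption)
MAX_TILT_DEG = 75.0  # views beyond this tilt fall into the last ring

def viewpoint_class(azimuth_deg: float, tilt_deg: float) -> int:
    """Map a viewing direction to a discrete viewpoint-class id."""
    az_bin = int((azimuth_deg % 360.0) // (360.0 / N_AZ))
    az_bin = min(az_bin, N_AZ - 1)  # guard against float rounding up to 360.0
    tilt = min(max(tilt_deg, 0.0), MAX_TILT_DEG - 1e-9)
    tilt_bin = int(tilt // (MAX_TILT_DEG / N_TILT))
    return tilt_bin * N_AZ + az_bin

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def look_at_pose(azimuth_deg: float, tilt_deg: float, distance: float):
    """World-to-camera rotation R (rows are camera axes) and translation t for
    a camera on a sphere of the given radius, looking at the pattern origin.
    Camera axes follow the x-right, y-down, z-forward convention."""
    a, th = math.radians(azimuth_deg), math.radians(tilt_deg)
    center = (distance * math.sin(th) * math.cos(a),
              distance * math.sin(th) * math.sin(a),
              distance * math.cos(th))
    z = tuple(-c / distance for c in center)  # optical axis points at origin
    x = (-math.sin(a), math.cos(a), 0.0)      # tangent along the azimuth
    y = cross(z, x)                           # completes a right-handed frame
    R = [list(x), list(y), list(z)]
    t = [-sum(R[i][k] * center[k] for k in range(3)) for i in range(3)]
    return R, t

def plane_homography(K, R, t):
    """H = K [r1 r2 t]: maps pattern points (X, Y, 1) on Z = 0 to the image."""
    M = [[R[i][0], R[i][1], t[i]] for i in range(3)]
    return [[sum(K[i][k] * M[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def project(H, X: float, Y: float):
    """Apply H to a pattern point and dehomogenize."""
    u, v, w = (H[i][0] * X + H[i][1] * Y + H[i][2] for i in range(3))
    return u / w, v / w

if __name__ == "__main__":
    K = [[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]]  # assumed intrinsics
    az, tilt = 30.0, 40.0
    R, t = look_at_pose(az, tilt, distance=2.0)
    H = plane_homography(K, R, t)
    print("class:", viewpoint_class(az, tilt))
    print("origin ->", project(H, 0.0, 0.0))  # approximately the principal point
```

To synthesize training images for one viewpoint class under this reading, one would sample several (azimuth, tilt) pairs inside that class's bin, warp the pattern image with each H (for example with OpenCV's `warpPerspective`), and composite the result over varied backgrounds; a separate descriptor database would then be built from the views of each class.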

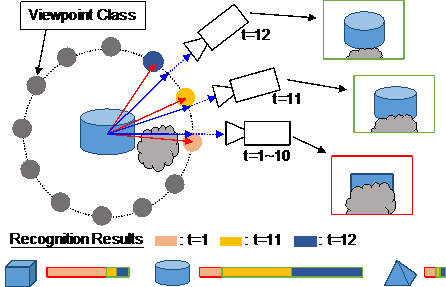

Furthermore, we have recently begun applying the concept of Viewpoint Class to object recognition. Object recognition is one of the major research fields in computer vision and has been applied in many areas. Conventional methods, however, are generally not robust to occluding obstacles, and their accuracy decreases when the camera stays at a poor position relative to the target object. We propose a novel object recognition method that runs in real time by dividing the viewpoints around each object in the scene into equal Viewpoint Classes and impartially integrating the Convolutional Neural Network (CNN) outputs obtained from each Viewpoint Class (see image). Experiments confirmed its effectiveness.
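The abstract does not give the integration rule. One plausible reading of "impartially integrating" is that every Viewpoint Class contributes equally to the final decision, however many frames were captured from it, so a camera that lingers at a poor viewpoint cannot dominate the result. The sketch below assumes each frame already comes with a per-label CNN softmax vector and an estimated Viewpoint Class; the class `ViewpointFusion` and its methods are hypothetical names for illustration only.

```python
from collections import defaultdict
from typing import Dict, List

class ViewpointFusion:
    """Hypothetical equal-weight fusion of per-frame CNN outputs, grouped by
    Viewpoint Class. Updates are incremental, so the cost per frame is
    proportional to the number of labels."""

    def __init__(self, num_labels: int):
        self.num_labels = num_labels
        self.sums: Dict[int, List[float]] = defaultdict(lambda: [0.0] * num_labels)
        self.counts: Dict[int, int] = defaultdict(int)

    def add_frame(self, view_class: int, probs: List[float]) -> None:
        """Accumulate one frame's CNN probabilities under its Viewpoint Class."""
        if len(probs) != self.num_labels:
            raise ValueError("probability vector has the wrong length")
        acc = self.sums[view_class]
        for i, p in enumerate(probs):
            acc[i] += p
        self.counts[view_class] += 1

    def fused(self) -> List[float]:
        """Average within each Viewpoint Class, then average across classes
        with equal weight, regardless of how many frames each class holds."""
        if not self.counts:
            raise ValueError("no frames observed yet")
        per_class = [[s / self.counts[c] for s in self.sums[c]] for c in self.counts]
        return [sum(col) / len(per_class) for col in zip(*per_class)]

    def predict(self) -> int:
        """Index of the label with the highest fused score."""
        scores = self.fused()
        return max(range(len(scores)), key=scores.__getitem__)

if __name__ == "__main__":
    fusion = ViewpointFusion(num_labels=2)
    for _ in range(10):
        fusion.add_frame(0, [0.2, 0.8])  # many frames from one poor viewpoint
    fusion.add_frame(1, [0.9, 0.1])
    fusion.add_frame(2, [0.9, 0.1])
    print(fusion.fused(), fusion.predict())
```

In this example, ten frames from Viewpoint Class 0 favor label 1, while single frames from classes 1 and 2 favor label 0. Equal weighting per class yields scores of about [0.667, 0.333] and predicts label 0, whereas a plain average over all twelve frames would yield about [0.317, 0.683] and be pulled toward label 1 by the lingering viewpoint.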